As artificial intelligence systems become increasingly sophisticated, we’re witnessing a fundamental shift in how we approach their design and implementation. While the industry spent considerable time perfecting « prompt engineering, » we’ve quickly evolved into the era of « context engineering. » Now, as I observe current trends and speak with practitioners in the field, I believe we’re on the cusp of the next major evolution: API and MCP (Model Context Protocol) engineering.

This progression isn’t merely about changing terminology—it represents a fundamental shift in how we architect AI systems for production environments. The transition from crafting clever prompts to engineering comprehensive context, and now to designing interactive API capabilities, reflects the maturing needs of AI applications in enterprise settings.

Evolution from Prompt Engineering to API/MCP Engineering: The Next Frontier in AI System Development

The Limitations of Current Approaches

The current landscape reveals significant gaps that necessitate this evolution. Context engineering, while a substantial improvement over simple prompt engineering, still operates within constraints that limit its effectiveness for complex, multi-step workflows. Users and AI systems frequently find themselves in lengthy back-and-forth exchanges—what the French aptly call « aller-retour »—that could be eliminated through better system design.

The core issue lies in the reactive nature of current implementations. Even with sophisticated context engineering, AI systems respond to individual requests without the capability to orchestrate complex, multi-step processes autonomously. This limitation becomes particularly apparent when dealing with enterprise workflows that require coordination between multiple tools, databases, and external services.

Moreover, the lack of standardization in how AI systems interact with external tools creates an « M×N problem »—every AI application needs custom integrations with every tool or service it interacts with. This fragmentation leads to duplicated effort, inconsistent implementations, and systems that are difficult to maintain or scale.

The Rise of MCP Engineering

The Model Context Protocol,, represents a significant step toward solving these challenges.

MCP provides a standardized interface for connecting AI models with external tools and data sources, similar to how HTTP standardized web communications. However, the real breakthrough comes not just from the protocol itself, but from how it enables a new approach to AI system design.

MCP engineering goes beyond simply connecting tools—it involves designing interactive API capabilities that can handle complex queries without requiring constant human intervention. This means creating API descriptions that include not just what a tool does, but how it can be composed with other tools, what its dependencies are, and how it fits into larger workflows.

The key insight is that API descriptions must become more sophisticated. Traditional API documentation focuses on individual endpoints and their parameters. In the MCP engineering paradigm, descriptions need to include:

- Workflow dependencies: Which APIs must be called before others

- Interactive patterns: How the API supports multi-step processes

- Contextual requirements: What information needs to be maintained across calls

- Composition guidelines: How the API integrates with other tools in complex workflows

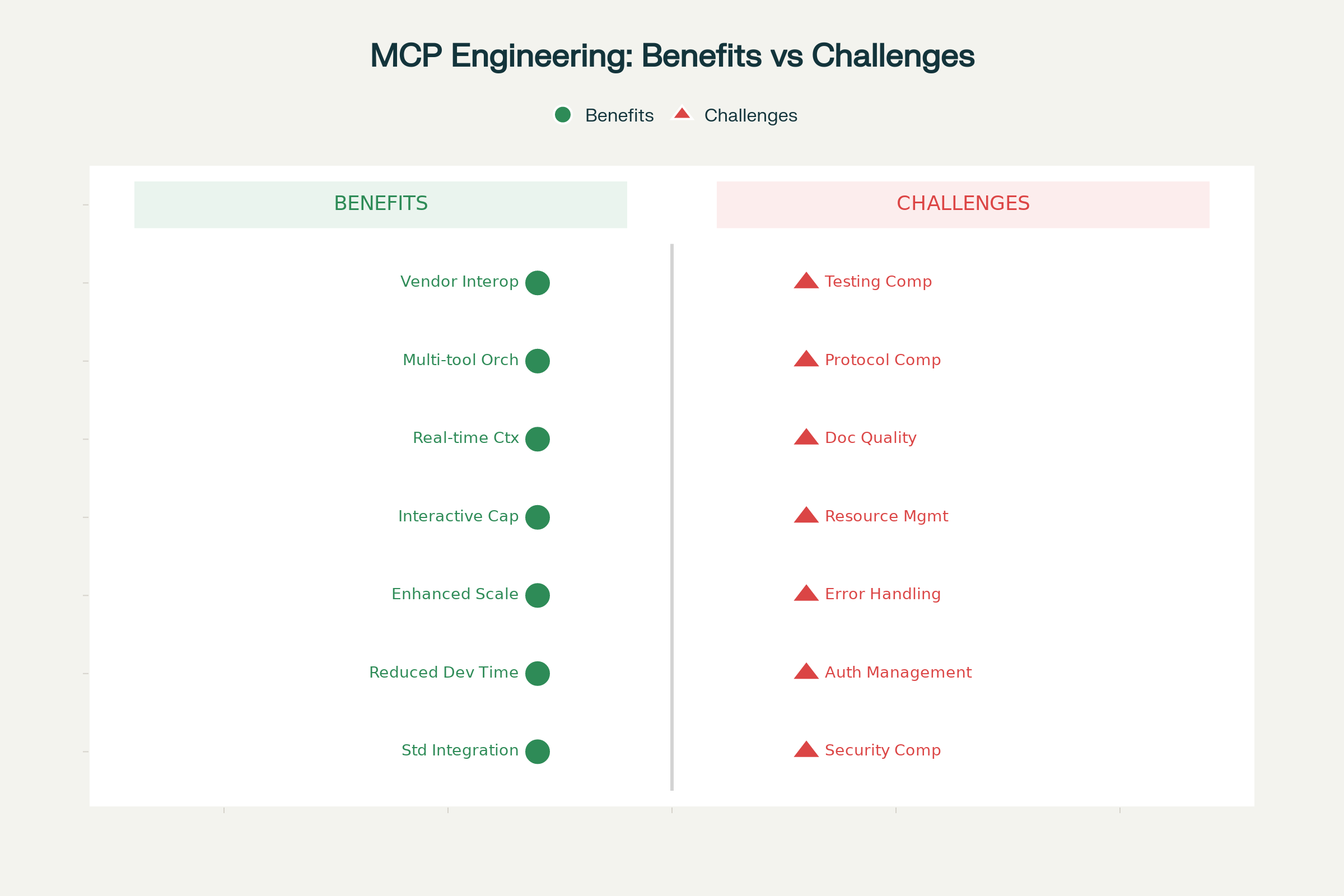

MCP/API Engineering: Weighing the Benefits Against the Challenges

Technical Implications and Requirements

This evolution demands a fundamental rethinking of how we design and document APIs. Interactive API design requires several new capabilities that traditional REST APIs weren’t designed to handle.

Enhanced API Descriptions

The descriptions must evolve from simple parameter lists to comprehensive interaction specifications. This includes defining not just what each endpoint does, but how it participates in larger workflows. For complex queries, the API description should include examples of multi-step processes, dependency graphs, and conditional logic patterns.

State Management and Context Persistence

Unlike traditional stateless APIs, MCP-enabled systems need to maintain context across multiple interactions. This requires new patterns for session management, context threading, and state synchronization between different tools in a workflow.

Error Handling and Recovery

Complex workflows introduce new failure modes that simple APIs don’t encounter. MCP engineering requires sophisticated error handling strategies that can manage partial failures, rollback operations, and recovery mechanisms across multiple connected systems.

Security and Authorization

When AI systems can orchestrate complex workflows automatically, security becomes paramount. This includes implementing proper access controls, audit trails, and permission boundaries to ensure that automated processes don’t exceed their intended scope.

Practical Implementation Strategies

Based on current best practices and emerging patterns, several key strategies are essential for successful MCP engineering implementation:

1. Design for Composability

APIs should be designed with composition in mind from the outset. This means creating endpoints that can be easily chained together, with clear input/output contracts that enable smooth data flow between different tools.

2. Implement Progressive Disclosure

Rather than overwhelming AI systems with every possible capability, implement progressive disclosure patterns where basic capabilities are exposed first, with more complex features available as needed.

3. Prioritize Documentation Quality

The quality of API descriptions becomes critical when AI systems are the primary consumers. Documentation should include not just technical specifications, but semantic descriptions that help AI systems understand the intent and proper usage of each capability.

4. Build in Observability

Complex workflows require comprehensive monitoring and debugging capabilities. This includes detailed logging, performance metrics, and tools for understanding how different components interact in practice.

Industry Adoption and Future Outlook

The adoption of MCP is accelerating rapidly across the industry. Major platforms including Claude Desktop, VS Code, GitHub Copilot, and numerous enterprise AI platforms are implementing MCP support. This growing ecosystem effect is creating a virtuous cycle where more tools support MCP, making it more valuable for developers to implement.

The enterprise adoption is particularly notable. Companies are finding that MCP’s standardized approach significantly reduces the complexity of integrating AI capabilities into their existing workflows. Instead of building custom integrations for each AI use case, they can implement a single MCP interface that works across multiple AI platforms.

Looking ahead, several trends are shaping the future of MCP engineering:

Ecosystem Maturation

The MCP ecosystem is rapidly expanding, with thousands of server implementations and growing community contributions. This maturation is driving standardization of common patterns and best practices.

AI-First API Design

APIs are increasingly being designed with AI consumption as a primary consideration. This represents a fundamental shift from human-first design to AI-first design, with implications for everything from data formats to error handling patterns.

Autonomous Workflow Orchestration

The ultimate goal is AI systems that can autonomously orchestrate complex workflows without human intervention. This requires APIs that can support sophisticated decision-making, conditional logic, and error recovery at the protocol level.

Recommendations for Practitioners

For organizations looking to prepare for this evolution, several strategic recommendations emerge from current best practices:

1. Invest in API Description Quality

The quality of your API descriptions will directly impact how effectively AI systems can use your tools. Invest in comprehensive documentation that includes not just technical specifications, but usage patterns, workflow examples, and integration guidelines.

2. Design for Interoperability

Avoid vendor lock-in by designing systems that adhere to open standards like MCP. This enables greater flexibility and reduces the risk of being trapped in proprietary ecosystems.

3. Implement Robust Security

With AI systems capable of orchestrating complex workflows, security becomes critical. Implement comprehensive access controls, audit logging, and permission management from the beginning.

4. Plan for Scale

MCP-enabled workflows can generate significant API traffic as AI systems orchestrate multiple tools simultaneously. Design systems with appropriate rate limiting, caching, and performance monitoring capabilities.

5. Focus on Developer Experience

The success of MCP engineering depends on developer adoption. Prioritize clear documentation, good tooling, and comprehensive examples to encourage widespread implementation.

The Road Ahead

The evolution from prompt engineering to context engineering to API/MCP engineering represents more than just technological progress—it reflects the maturation of AI systems from experimental tools to production-ready platforms. This progression is driven by the increasing demands of enterprise applications that require reliable, scalable, and secure AI capabilities.

The next phase will likely see the emergence of AI-native architectures that are designed from the ground up to support autonomous AI workflows. These systems will go beyond current approaches by providing native support for AI decision-making, workflow orchestration, and cross-system coordination.

As we look toward 2025 and beyond, the organizations that succeed will be those that recognize this evolution early and invest in building the infrastructure, skills, and processes needed to support this new paradigm. The shift to API/MCP engineering isn’t just a technical change—it’s a fundamental reimagining of how AI systems interact with the digital world.

The future belongs to AI systems that can seamlessly navigate complex workflows, coordinate multiple tools, and deliver sophisticated outcomes with minimal human intervention. By embracing MCP engineering principles today, we can build the foundation for this AI-enabled future.

This evolution from prompt engineering to API/MCP engineering represents a natural progression in AI system development. As we move forward, the focus will shift from crafting perfect prompts to architecting intelligent systems that can autonomously navigate complex digital environments. The organizations that recognize and prepare for this shift will be best positioned to leverage the full potential of AI in their operations.

⁂